LEARNING LABS

Collaboration Spaces

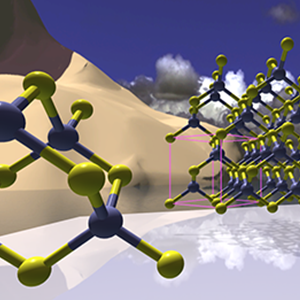

When Oculus announced its Touch controllers almost a year ago, I knew their arrival would change whatever UI/UX development I was doing at that moment. I prepared as best I could by designing ChemSim-VR for two input devices: an Xbox gamepad controller (known) and Oculus Touch controllers (unknown). Vive's hand-held controllers were already shipping and I knew what they were capable of, so it wasn't hard to imagine what their competition might do.

In commercial software development this is relatively common. Relevant product design requires considering new hardware before it arrives. It also means putting your best foot forward whatever your constraints happen to be. In my case I knew I wanted ChemSim-VR to be more than an observational experience of cruising around with a joystick, looking at molecules, watching instructional animation, and listening to a narrator. So I chose a starting place and a UI design that could be readily modified and expanded once my Oculus Touch controllers arrived.
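One way to picture a UI design that can absorb a new controller later is a thin input abstraction: the app consumes device-independent actions, and each device gets its own small adapter. The sketch below is only an illustration of that idea in plain Python, not ChemSim-VR code and not any engine's API. Every name in it (`Actions`, `GamepadInput`, `TrackedControllerInput`, `update_player`, and the raw-state keys such as `left_stick_x` or `grip`) is a hypothetical chosen for the example.

```python
from dataclasses import dataclass
from typing import Protocol, Tuple


@dataclass
class Actions:
    """Device-independent intent that the rest of the app consumes."""
    move_x: float = 0.0   # strafe, -1.0 .. 1.0
    move_y: float = 0.0   # forward/back, -1.0 .. 1.0
    grab: bool = False    # pick up or select an object


class InputDevice(Protocol):
    def poll(self) -> Actions: ...


class GamepadInput:
    """The known device: left stick moves, one face button grabs."""

    def __init__(self, raw: dict):
        # Hypothetical raw state; a real engine's input layer would supply this.
        self.raw = raw

    def poll(self) -> Actions:
        return Actions(
            move_x=self.raw.get("left_stick_x", 0.0),
            move_y=self.raw.get("left_stick_y", 0.0),
            grab=self.raw.get("button_a", False),
        )


class TrackedControllerInput:
    """A device added later: thumbstick moves, squeezing the grip grabs."""

    GRIP_THRESHOLD = 0.5  # assumed cutoff for treating an analog grip as a grab

    def __init__(self, raw: dict):
        self.raw = raw

    def poll(self) -> Actions:
        return Actions(
            move_x=self.raw.get("thumbstick_x", 0.0),
            move_y=self.raw.get("thumbstick_y", 0.0),
            grab=self.raw.get("grip", 0.0) > self.GRIP_THRESHOLD,
        )


def update_player(
    position: Tuple[float, float], device: InputDevice, speed: float = 1.0
) -> Tuple[Tuple[float, float], bool]:
    """Advance the player one step using whichever device is plugged in."""
    actions = device.poll()
    x, y = position
    new_position = (x + actions.move_x * speed, y + actions.move_y * speed)
    return new_position, actions.grab
```

With this shape, `update_player` never needs to know which hardware is attached, so supporting a second controller means writing one more adapter rather than reworking the interface code.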

I've read through the Oculus Touch SDK documentation and written some code for the controllers, and I can say without hesitation that these are exciting, ergonomically natural, HID-compliant devices. Within the Unity game engine, the API for Touch controllers is the updated Unity Input Manager class, familiar to anyone who's written controls for the Xbox gamepad. The image above is a screencap showing how the controllers and some off-the-shelf Oculus Avatar SDK hands look in-world. It's likely that Oculus Touch controllers will see only minor modification, while head-mounted displays like the Rift will evolve rapidly in the coming months and years.

T.M.Wilcox, January, 2017

Collaboration Spaces

The Ultimate PDA

Learning To Code

VR Interface Mock-Ups

Amazon's Lumberyard Engine

Samsung Gear-VR Controller

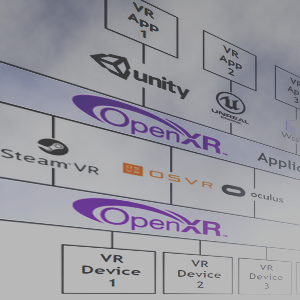

Standards For Virtual Reality

Writing Design Documents

Making 3D Objects for Virtual Reality

Inside-Out and Outside-In Head Tracking

Making a Puzzle App With Unity

HoloLens Spectator View

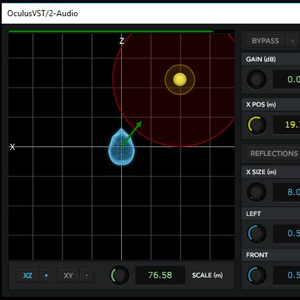

Spatialized Audio For Virtual Reality

Physical Vs. Virtual Campus Expansion

Head-Up GUI Design For VR

Windows 10 Creators Update

Visualizing Historic Buildings In VR

Sansar First Look

Accurate Motion Capture With Vive Trackers

Interface Design For Oculus Touch Controllers